“A cut section of the sun, showing the spots, Luminous Atmospher, and the opaque body of the sun,” from An Abridgment of Smith’s Illustrated Astronomy (1850). This is exactly the kind of cool image hidden in these books.

We now have a massive wealth of digitized books. Between HathiTrust, the Internet Archive’s Open Library, Google Books, and the range of other organizations that have gotten into digitization, we have millions upon millions of digitized books. I don’t know about you, but (in general) I’m far less interested in reading these books than I am in skimming them for cool images. The same is true of digitized newspapers.

Those books are loaded with amazingly cool images: prints, engravings, woodcuts, pictures, plates, charts, figures, and other kinds of diagrams. I tend to keep track of these sorts of things with Pinterest. (My Pinterest is full of images I’ve plucked out of Internet Archive books I’m skimming for exactly this.) I imagine there are a lot of folks out there who would be happy to play at this kind of visual treasure hunt: find images inside digitized items and describe them. I think it would be really neat to have some basic tool that let folks who find these images pull them out and describe them, so that others could find them too and use them as points of entry into the books.

I’d love to scheme with folks about how we could systematically tap into this resource. How can we go about slurping these images out of the books and getting them described in ways that make them reusable for any number of purposes? I could imagine something like Pinterest, but one that pushed the items back into the Internet Archive or uploaded them to Wikisource, kept a link to the original resource, and let someone describe the individual image while keeping it connected with the information about the book or newspaper it originally appeared in.
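One way to picture the “keep it connected to the book” requirement is as a small record per extracted image. The Python sketch below is only an illustration under assumptions: the class name `ImageRecord`, its fields, and the tag normalization are hypothetical, not part of any existing Internet Archive, Wikisource, or Pinterest API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ImageRecord:
    """One image pulled out of a digitized book, still tied to its source.

    Hypothetical data model for illustration; field names are assumptions.
    """
    source_title: str                  # title of the book or newspaper
    source_year: int                   # publication year of the source
    source_url: str                    # link back to the digitized item
    page: int                          # page (or leaf) where the image appears
    bbox: Tuple[int, int, int, int]    # crop as (left, top, width, height) in pixels
    description: str = ""              # free-text description contributed by a person
    tags: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject crops that cannot point at a real region of the page.
        left, top, width, height = self.bbox
        if left < 0 or top < 0 or width <= 0 or height <= 0:
            raise ValueError(f"invalid crop box: {self.bbox}")

    def add_tag(self, tag: str) -> None:
        """Add a lowercased, trimmed tag once, so contributors don't create duplicates."""
        tag = tag.strip().lower()
        if tag and tag not in self.tags:
            self.tags.append(tag)

    def credit_line(self) -> str:
        """A caption that sends readers back to the original volume."""
        return f"From {self.source_title} ({self.source_year}), p. {self.page}: {self.source_url}"
```

The point of the design is that the crop box and the source link travel with the description, so wherever the image ends up, it can still serve as a point of entry back into the book.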

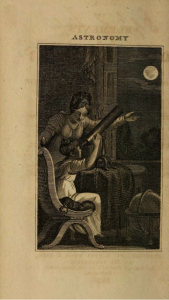

How about this frontispiece from the 1823 Elements of Astronomy, showing a woman teaching a young girl to use a telescope to study the moon? It appears in Kim Tolley’s “The Science Education of American Girls” as visual evidence for the argument that in the early 19th century science was for girls while the classics were for boys.

Or heck, it might be something one could pull together by adding some kind of marker to things posted on Pinterest. I imagine there are far more clever ways to go about this, and that is what this session would be about.

I picture us hashing out how something like this might work. We could sketch out which pieces we might hook together to make it happen.

Here are some things we might talk/work through.

- What would the ideal user experience for this kind of thing look like?

- What would be the best way to stitch something like this together?

- Should some group host it, or is there a distributed way to do something like this?

- What groups or organizations might be interested in being involved?

What do you think? Feel free to add other questions we might broach in the session. Oh, and there is nothing stopping folks from blogging out their ideas in advance. Feel free to write up your ideas about how this might work best, or other use cases you might imagine, as comments. Also, just feel free to weigh in and say whether you think something like this would be useful.

Interesting idea, Trevor. Harvesting the images and providing access to them through a single interface would be a fabulous thing for so many people. Images hidden in text are a problem and, as you note, there is a plethora of them available thanks to massive book-scanning efforts. I found it noteworthy that you often skim the texts merely to look at the images. One of my gripes about the library and information science world is its near obsession with text, to the detriment of providing access to all other formats. Thankfully, this has begun to shift noticeably in the past decade, but it hasn’t yet addressed the issue you bring up here.

The way I see it, there are two issues at play here. The first is that many of these images will not be described in much detail (if at all) within the texts, which will make identifying and describing them problematic. The second is that the way images can be described is variable. What is most important to mention? That might shift from person to person, or even vary for a single person on different days. Having people with specific knowledge of the text, time period, and so on help with the description would certainly move things along, however. The legal and technical aspects would seem to be the easy part.

If you are looking for folks to work on this with you, I am up for it. My research is all about visual information and image description and so I am very much interested in the topic.

Thank you, Trevor,

I am in; I also collect images on Pinterest for fun and for scholarship, and the pop history site Anywhen is also a cool place to gather and extract interesting materials for academic use. I’d love to learn of other methods for doing this sort of treasure hunt. It is like finding old letters in your neighbor’s attic. In fact, it is exactly that: a trove of stuff kept by amateur historians and ordinary hoarders saved my dissertation! This is a great idea.

Boy, I love this idea, but even crowdsourcing it seems daunting. So many books, so many images inside them . . .

It seems like it shouldn’t be horribly difficult to algorithmically distinguish image pages from text pages by looking for traits like “many parallel rows on a bitonal image” or “is colorful.” If a catalog record was ever created for a text, you’d also have some rough starting points for metadata and subject tags.
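To make the heuristic concrete, here is a minimal Python sketch under stated assumptions: a page arrives as rows of (r, g, b) pixels, “colorful” means a noticeable share of pixels whose channels differ, and on a binarized page printed text shows up as many short bands of ink (one per line) while an illustration shows up as a tall, unbroken band. The function names and every threshold are hypothetical, chosen for illustration rather than tuned on real scans.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each channel 0-255
Page = List[List[Pixel]]      # a scanned page as rows of pixels


def colorful_fraction(page: Page, min_spread: int = 40) -> float:
    """Share of pixels whose channels differ by at least min_spread (i.e. not grey)."""
    total = colored = 0
    for row in page:
        for r, g, b in row:
            total += 1
            if max(r, g, b) - min(r, g, b) >= min_spread:
                colored += 1
    return colored / total if total else 0.0


def ink_bands(page: Page, dark: int = 128, min_ink: float = 0.02) -> List[int]:
    """Binarize each row and return the heights of consecutive runs of inked rows.

    A row counts as inked when at least min_ink of its pixels are darker than `dark`.
    """
    bands: List[int] = []
    run = 0
    for row in page:
        ink = sum(1 for r, g, b in row if (r + g + b) / 3 < dark)
        if row and ink / len(row) >= min_ink:
            run += 1
        elif run:
            bands.append(run)
            run = 0
    if run:  # the page ended inside a band
        bands.append(run)
    return bands


def probably_has_image(page: Page, color_threshold: float = 0.05,
                       max_line_height: int = 20) -> bool:
    """Flag a page as a likely illustration page.

    Colorful pages are flagged outright; otherwise text appears as many short
    bands (one per printed line), so any band taller than max_line_height rows
    suggests a picture, plate, or diagram.
    """
    if colorful_fraction(page) >= color_threshold:
        return True
    return any(height > max_line_height for height in ink_bands(page))
```

Real scans would trip this up in plenty of ways (skewed pages, stains, yellowed paper registering as “colorful”), so it is better thought of as a way to flag candidate pages for people to look at than as a finished classifier.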

Here’s an example of a project that algorithmically yanked images from archive.org scans (albeit from ’80s game magazines, so its assumptions don’t quite carry over to 19th-century texts): cosmicrealms.com/blog/2012/12/31/c64-magazine-game-wallpaper-generator/

It strikes me that there is a particular market for this kind of thing: anywhere there are antiques or rare books for sale, there are invariably reams of plates cut out of old books, now sold as “singles” to be framed or something. In other words, the image has a value well beyond that of whatever volume originally housed it. Here, of course, we would be talking about a digital representation rather than a physical artifact, but I suspect there could still be tremendous interest in such an archive, particularly if it’s rich in metadata, tags, etc.

Great idea, Trevor, both for exposing the vast array of wonderful archival and digitized images and for furthering the visual research agenda. Developing theme-based collections could help scope the project a bit. As you know, the LIS community has had success using crowdsourced description for image collections, so that might be an option. I could assist with user design requirements, trying to figure out what makes an image “more usable” as visual evidence (I explore many of these topics in my dissertation research, as we have discussed elsewhere). Lev Manovich and his team have created an interesting tool for image aggregation, ImagePlot: lab.softwarestudies.com/p/imageplot.html

I would love to join such a session, Trevor, especially after what we discussed last week at the annual meeting of our Italian Society for the Study of Photographs (www-sisf.eu), where we pointed out how many newspapers and scholarly journals today still use images in a passive way, rather than because images tell us something by themselves; they speak their own language. For instance, there is no trace of any meta-information on pictures published in newspapers, not even the name of the photojournalist. So your idea to crowdsource images is nice; it is what Google Images, or better TinEye, does when answering our queries on the web. It would be nice to fix this in a database for later retrieval, with a caption and context; this is the added scholarly value we are all waiting for on the web, I suppose. I would say that this information (also aimed at by Sean Takats above) is sometimes even more valuable than access to images that rarely “speak for themselves” (I mean for scholarly purposes) without any context or story to interpret them. Anyway, I just want to tell you how much I would like to be there, since I work a lot myself on how the digital turn has influenced photography and how the same photographs have been “moving” across websites. Maybe we could do something similar for the NCPH Monterey conference in March 2014?

This is a great application of a common, and challenging, computer vision problem. Projects similar in flavor include:

- Labeled Faces in the Wild

- LabelMe

- Learning from a Visual Folksonomy: Automatically Annotating Images from Flickr

Unlike these more general projects, the data from digitized books should have more structure and surrounding information, which should allow good descriptive text to be generated.

I too am very interested in this session. I’m also curious how metadata can be applied comprehensively, since any given image can have multiple meanings depending on context and audience.

Funny you should mention this, since we have a grant-funded project at the Folger right now to digitize and index 10,000 images from 17th-century English books, and ONLY the images. Scans from high-contrast microfilm of most of the texts are already available through EEBO, with transcriptions becoming available through EEBO-TCP, but the visual language in EEBO is silenced. Of course, ideally, we’d scan a whole book and not just its engraved frontispiece, but 10,000 cover-to-cover shots from books won’t get us a corpus of images any time soon.