We now have a massive wealth of digitized books. Between HathiTrust, the Internet Archive’s Open Library, Google Books and the other range of organizations that have gotten into digitization we have millions upon millions of digitized books. I don’t know about you, but (in general) I’m far less interested in reading these books than I am in skimming them for cool images. The same thing is true of digitized newspapers.

Those books are loaded with amazingly cool images, prints, engravings, woodcuts, pictures, plates, charts, figures and other kinds of diagrams. I tend to keep track of these sorts of things with Pinterest. (My Pinterest is full of images I’ve plucked out of IA books I’m skimming for these kinds of images.) I imagine there are a lot of folks out there who would be happy to play at this kind of visual treasure hunt. Find images, inside digitized items and describe them. I think it would be really neat if we had some basic sort of tool that would let folks who find these things pull them out and describe them so that other folks could find them too and use them as points of entry to the books.

I’d love to scheme with folks about how we could go about systematically tapping into this resource. How can we go about slurping these images out of the books, and getting them described in ways that make the reusable for any number of purposes? I could imagine something like Pinterest, but that pushed the items back into the Internet Archive or uploaded them to WikiSource and kept a link between the original resource and let someone describe the individual image and keep it connected with the information on the book or newspaper it originally appeared in.

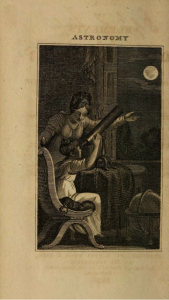

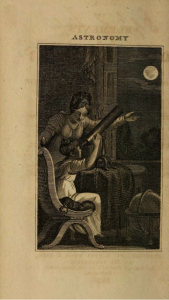

How about this frontspiece, from the 1823 Elements of Astronomy showing a women teaching a young girl to use a telescope to study the moon. It shows up as visual evidence in Kim Tolley’s “The Science Education of American Girls” as evidence for the argument that in the early 19th century science was for girls while classics was for boys.

Or heck, it might be something one could pull together with some kind of marker in things posted to Pinterest. I imagine there are far more cleaver ways to go about this and that is what this session would be about.

I picture us hashing out how something like this might work. We could sketch out what things we might hook together to do this sort of thing.

Here are some things we might talk/work through.

- What would the ideal user experience for this kind of thing look like?

- What would be the best way to stitch something like this together?

- Should some group host it, or is there a distributed way to do something like this?

- What groups or organizations might be interested in being involved?

What do you think? Feel free to add other questions we might broach in the session. Oh, and there is nothing stoping folks from blogging out their ideas in advance. Feel free to write up as comments your ideas about how this might work best, or some other use cases you might imagine. Also, just feel free to weigh in and say if you think something like this would be useful.